MANAS Q&A

Q: What is the workflow of MANAS?

A: Take plant recognition as an example:

A user submits a plant image through the API or on the MANAS website;

The plant image is uploaded to the IPFS cache;

The system calls the distributed AI model for plant recognition;

The model runs the computing task on the spare computing power of miners;

The computed result is sent back to the user, who is charged a fee;

The fee is shared between the algorithm provider and the computing power providers;

Finally, the image is deleted from the IPFS cache.
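The steps above can be sketched as a single function. This is a minimal, hypothetical sketch: `IpfsCache` is an in-memory stand-in rather than a real IPFS client, `model` is any callable standing in for the distributed model, and the fee amount and the 50/50 split are assumed values, since the text does not say how the fee is divided. The `try`/`finally` ensures the image is removed from the cache even if the computation fails.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class IpfsCache:
    """In-memory stand-in for the IPFS cache (hypothetical, not an IPFS client)."""
    blobs: Dict[str, bytes] = field(default_factory=dict)

    def put(self, data: bytes) -> str:
        key = uuid.uuid4().hex  # a real IPFS node would return a content ID (CID)
        self.blobs[key] = data
        return key

    def get(self, key: str) -> bytes:
        return self.blobs[key]

    def delete(self, key: str) -> None:
        self.blobs.pop(key, None)


def recognize_plant(image: bytes, cache: IpfsCache,
                    model: Callable[[bytes], str], fee: int,
                    algorithm_share_pct: int = 50) -> Tuple[str, Dict[str, int]]:
    """One request: cache the image, compute, split the fee, delete the image.

    `fee` (in the smallest fee unit) and `algorithm_share_pct` are assumed
    parameters; the real fee and split ratio are not given in the text.
    """
    key = cache.put(image)                          # step 2: upload to the cache
    try:
        result = model(cache.get(key))              # steps 3-4: model runs on miners
        algo_part = fee * algorithm_share_pct // 100
        payout = {"algorithm_provider": algo_part,   # step 6: share the fee
                  "compute_provider": fee - algo_part}
        return result, payout                       # step 5: result goes to the user
    finally:
        cache.delete(key)                           # step 7: remove the cached image
```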

Q: How do the services on MANAS work?

A: Every service on MANAS corresponds to an algorithm model. The services currently launched on MANAS include debit/credit card recognition, document recognition, animal recognition, plant recognition, and face recognition. All of them are image-based recognition services, the niche in which Steve Deng’s team specializes.

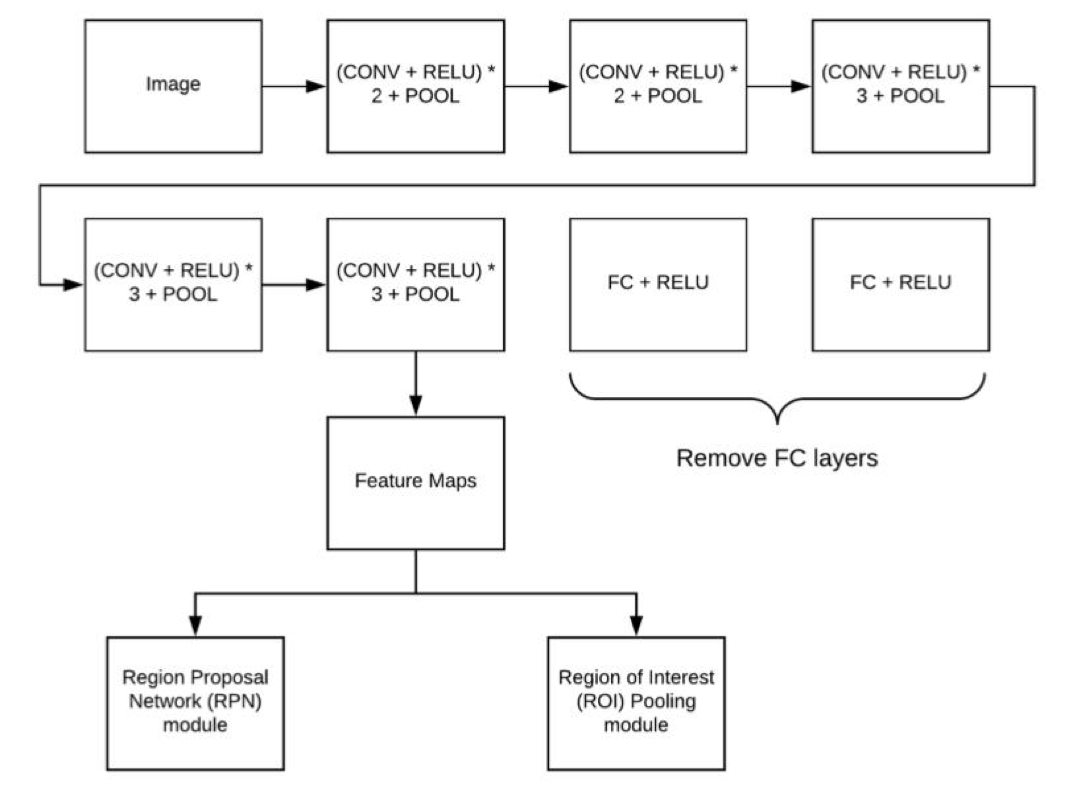

These services use the Faster R-CNN network, an improved region-based convolutional neural network for object detection. Its workflow is as follows:

When a colour image is uploaded, it first passes through the CNN layers, which extract its features. Matrix uses a pre-trained VGG16 network for feature extraction, with the fully connected layers removed so that only the convolutional layers remain;

The feature maps from the convolutional layers are sent to two branches: the RPN (Region Proposal Network) and the RoI (Region of Interest) pooling layer. The RPN proposes candidate regions in the image and separates foreground from background; these proposals are used in the final detection;

The RoI pooling layer takes the feature maps and the proposals, and extracts a fixed-size feature map for each proposal, which is then passed to the fully connected layers to identify the object type;

Finally, the classifier determines the type and location of each object in the image.
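The two region-level steps can be illustrated in plain Python. This is a simplified, single-channel sketch, not Matrix's implementation: `label_anchor` marks a candidate box as foreground or background by its overlap (intersection over union, IoU) with annotated boxes, using the 0.7 / 0.3 thresholds from the original Faster R-CNN paper (the thresholds Matrix uses are not stated), and `roi_max_pool` turns a proposal of any size into a fixed-size grid, which is what allows the fully connected layers to accept every proposal.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def _area(r: Box) -> float:
    return (r[2] - r[0]) * (r[3] - r[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = _area(a) + _area(b) - inter
    return inter / union if union > 0 else 0.0


def label_anchor(anchor: Box, gt_boxes: Sequence[Box],
                 fg_thresh: float = 0.7, bg_thresh: float = 0.3) -> int:
    """Simplified RPN labeling: 1 = foreground, 0 = background, -1 = ignored."""
    best = max((iou(anchor, g) for g in gt_boxes), default=0.0)
    if best >= fg_thresh:
        return 1
    if best < bg_thresh:
        return 0
    return -1


def _ceil_div(a: int, b: int) -> int:
    return (a + b - 1) // b


def roi_max_pool(fmap: List[List[float]], roi: Tuple[int, int, int, int],
                 out_h: int = 2, out_w: int = 2) -> List[List[float]]:
    """Max-pool roi = (x1, y1, x2, y2), in feature-map cells with exclusive
    x2/y2, of a single-channel feature map into a fixed out_h x out_w grid."""
    x1, y1, x2, y2 = roi
    h, w = y2 - y1, x2 - x1
    pooled = []
    for i in range(out_h):
        # Bin edges: floor for the start, ceiling for the end, so no bin is empty
        ys, ye = y1 + (i * h) // out_h, y1 + _ceil_div((i + 1) * h, out_h)
        row = []
        for j in range(out_w):
            xs, xe = x1 + (j * w) // out_w, x1 + _ceil_div((j + 1) * w, out_w)
            row.append(max(fmap[y][x] for y in range(ys, ye) for x in range(xs, xe)))
        pooled.append(row)
    return pooled
```

A full RPN also marks the best-matching anchor for each annotated box as foreground, and real RoI pooling works on multi-channel maps with coordinates scaled from image space; both are omitted here for brevity.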

The diagram below demonstrates the entire network structure:

Q: How are the services on MANAS trained?

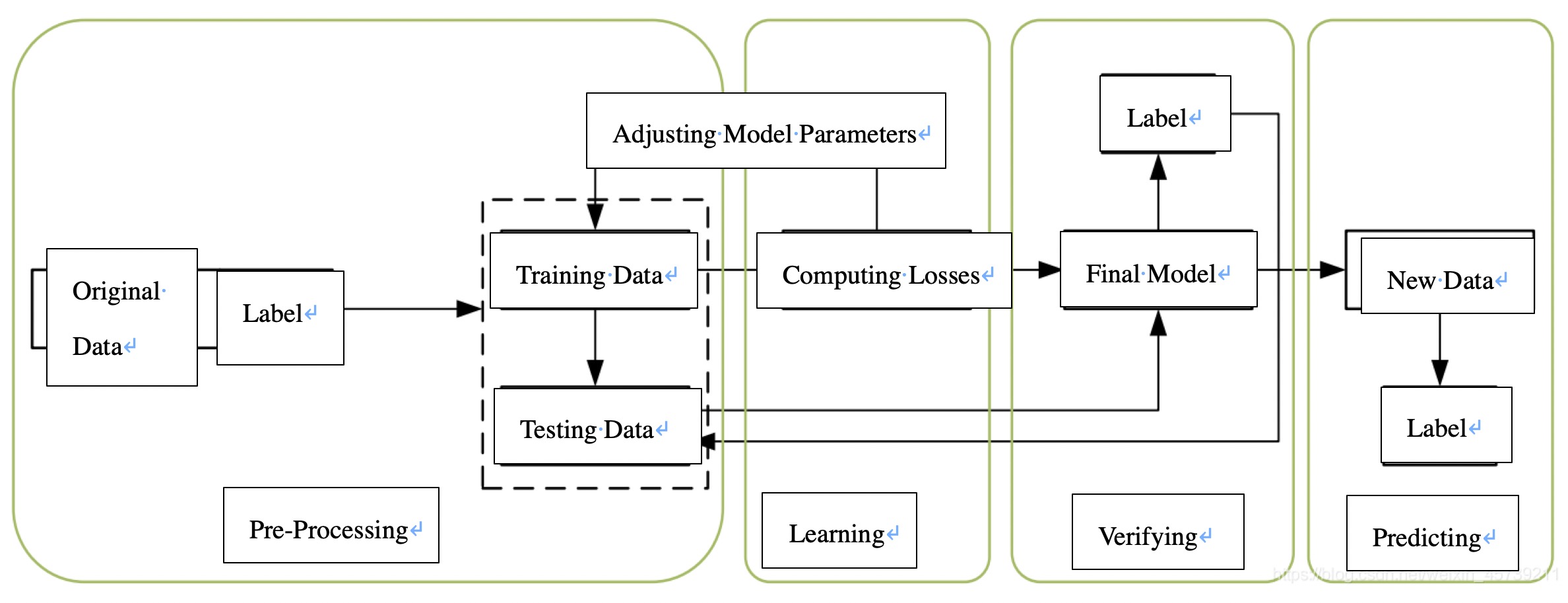

A: The training process of MANAS service models is shown in the diagram below. First, we need to label the data, and the labeling method depends on the specific task. For image recognition, the labels describe object types: a label should include the subject’s position in the image (usually a rectangle enclosing the subject) and its type. The data then needs further pre-processing, such as normalization. After that, training can start; its goal is to determine the values of the neural network’s parameters (usually weights and biases).
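As a small illustration of the label format and the pre-processing step, the sketch below stores one annotation as a bounding rectangle plus a type, and normalizes 8-bit pixel values. The class name, field names, and the default mean/std of 0.5 are hypothetical; the text does not say which normalization MANAS uses.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BoxLabel:
    """One annotation: the rectangle enclosing the subject (pixels) and its type."""
    x1: int
    y1: int
    x2: int
    y2: int
    category: str

    def __post_init__(self) -> None:
        if self.x2 <= self.x1 or self.y2 <= self.y1:
            raise ValueError("box must have positive width and height")


def normalize(pixels: List[int], mean: float = 0.5, std: float = 0.5) -> List[float]:
    """Scale 8-bit values to [0, 1], then standardize: (p / 255 - mean) / std."""
    return [(p / 255 - mean) / std for p in pixels]
```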

At the beginning of training, these parameters are initialized with random values drawn from a probability distribution. Next, we feed the data and labels into the neural network together. The network computes an output from the data using its current weights, i.e. a predicted label. Usually, this prediction differs from the true label, and the loss function measures the difference. From the loss function, we obtain each parameter’s error contribution (its gradient) by calculating partial derivatives, and then update the weights according to these contributions. This process repeats over many iterations until the loss is close to 0, which means the deep neural network can draw accurate conclusions on the training data. At this point, we should further test the model’s accuracy on data that was not used for training. If the accuracy is lacking, the network may need structural changes or further training.
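The training loop described above can be shown on the smallest possible network, a single linear neuron y = w*x + b with a mean-squared-error loss. This is an illustrative sketch, not the MANAS training code, and the learning rate, epoch count, and tolerance are arbitrary choices: it initializes the parameters randomly, computes predictions and the loss, takes partial derivatives, updates the weights, stops once the loss is close to 0, and then checks the result on held-out data.

```python
import random
from typing import List, Tuple

Data = List[Tuple[float, float]]


def train(data: Data, lr: float = 0.05, epochs: int = 2000, tol: float = 1e-8,
          seed: int = 0) -> Tuple[float, float, float]:
    """Fit y = w*x + b by gradient descent on mean squared error.

    Returns (w, b, final_loss).
    """
    rng = random.Random(seed)
    w, b = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)  # random initialization
    n = len(data)
    loss = float("inf")
    for _ in range(epochs):
        # Forward pass and loss: mean of (prediction - label)^2
        errors = [(w * x + b) - y for x, y in data]
        loss = sum(e * e for e in errors) / n
        if loss < tol:  # stop once the loss is close to 0
            break
        # Partial derivatives of the loss with respect to each parameter
        grad_w = 2 * sum(e * x for e, (x, _) in zip(errors, data)) / n
        grad_b = 2 * sum(errors) / n
        # Move each parameter against its gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss


def mse(w: float, b: float, data: Data) -> float:
    """Evaluate the trained parameters on (held-out) data."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)
```

For example, training on points from y = 2x + 1 with x in 0..4 recovers w close to 2 and b close to 1, and `mse` on unseen points such as x = 5 and x = 6 stays near 0.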

Q: What data is used for training AI service models on MANAS?

A: For training the plant recognition service, we mainly used the ImageNet image database. For the debit/credit card and document recognition services, we used several databases that can be accessed for algorithm training but not for data searches, such as Alipay’s database, as well as expired document photos from public security authorities and banks. We used a small sample size for training to guarantee quality. For training face recognition models, we used public data from the internet along with data from paid third-party volunteers.

Q: Are all the services currently available on MANAS trained using MANTA?

A: Currently, the services on MANAS are trained using centralized methods before being deployed in a decentralized way. Once the development and testing of MANTA are complete, the Matrix team will switch to MANTA for model training, and we will encourage more algorithm scientists to use MANTA to train their models.

Q: What advantages do AI services on MANAS have compared with those on other platforms?

A: Besides decentralization, scalability, and open access for anyone, image recognition algorithms on MANAS have an edge over those on other platforms thanks to technical innovations.

Generally speaking, the biggest hurdle in image recognition is that subjects appear at different sizes. Take facial recognition as an example: an image may contain both small and large faces, and recognizing them all is not easy. To solve this problem, most platforms scan images with sliding windows over an image pyramid (copies of the image rescaled to several sizes) to detect faces of different sizes. However, this method is not ideal in either speed or accuracy. MANAS’s facial recognition uses a fully convolutional network that generates region proposals end to end, with greatly improved speed and accuracy compared with traditional methods.
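To see why the traditional approach is slow, the sketch below counts how many fixed-size windows a sliding-window detector must classify over an image pyramid. The 24-pixel window, 4-pixel stride and 0.8 scale factor are hypothetical values chosen only for illustration.

```python
from typing import Iterator, Tuple


def pyramid_scales(width: int, height: int, window: int,
                   factor: float = 0.8) -> Iterator[Tuple[int, int]]:
    """Yield (w, h) for each pyramid level, shrinking by `factor` until the
    image is smaller than the detection window."""
    w, h = width, height
    while w >= window and h >= window:
        yield w, h
        w, h = int(w * factor), int(h * factor)


def count_windows(width: int, height: int, window: int = 24,
                  stride: int = 4, factor: float = 0.8) -> int:
    """Number of fixed-size windows a sliding-window detector must classify
    over every pyramid level."""
    total = 0
    for w, h in pyramid_scales(width, height, window, factor):
        total += ((w - window) // stride + 1) * ((h - window) // stride + 1)
    return total
```

For a 640x480 image, the first pyramid level alone yields 17,825 windows under these settings, each classified separately; a fully convolutional network instead shares computation across the whole image in a single forward pass.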

The Matrix AI Service Platform MANAS will receive a major update in the third quarter. Besides introducing many highly functional AI applications, this update will also let people access AI services through an API, and this video will show you how to do that.

First, open your browser and go to manas.matrix.io. On the MANAS home page, click your account in the top right corner. This brings you to a new interface. Once you’re there, click User API, where you’ll see an access token. Now we need to create a new token: click New Token, then save the newly created token by clicking Save Token. You’ll need this token later, so please keep it somewhere safe. After obtaining your access token, you can start creating your POST request, as shown in the pictures here. After that, you can integrate the API into your applications or services and access MANAS services through it.
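The POST request step might look like the sketch below, which uses only Python’s standard library. The endpoint URL, the JSON body with a base64-encoded `image` field, and the `Bearer` authorization scheme are all assumptions for illustration; the actual request format is the one shown on the MANAS User API page.

```python
import base64
import json
import urllib.request

# HYPOTHETICAL endpoint: replace with the service URL shown for your MANAS account.
API_URL = "https://manas.matrix.io/api/plant-recognition"  # placeholder path


def build_recognition_request(image_bytes: bytes, token: str,
                              url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying an image and a token.

    Assumed format: JSON body with a base64 "image" field and a Bearer-style
    Authorization header. The real MANAS API may differ.
    """
    body = json.dumps({"image": base64.b64encode(image_bytes).decode("ascii")})
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 30.0) -> dict:
    """Send the request and decode a JSON response (requires network access)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

For example, `send(build_recognition_request(Path("flower.jpg").read_bytes(), token))` (with `from pathlib import Path`) would upload a photo and return the decoded JSON response, assuming the endpoint accepts this format.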

Now, generally speaking, the API workflow is shown in the chart here. The user sends a request for the corresponding service from a terminal integrated with the API. The terminal sends the request to the API server, and the server responds and sends the result back to the user.

Let’s use an example to explain this process. Say an App developer wants to build a plant recognition App, and he wants to use the plant recognition service available on MANAS. He uses his MANAS account to create an API token and integrates the API into his App. Now, when a user wants to identify a plant, he simply takes a photo of it and uploads the photo. The API sends a request to MANAS’s distributed AI server, which calls a plant recognition algorithm and distributes the task to an idle miner for computing. The results are sent back to the App developer’s server, and the user receives feedback saying that this flower is probably Calliopsis. After the user gets the answer, the App developer is charged a certain amount of MAN from his MANAS account as a fee for accessing the API, and this fee is shared by the service provider and the miner.

The addition of this new feature gives people an alternative to using MANAS’s services directly on the platform: developers can now also integrate these services into their own applications. A plant recognition App can help children learn about plants, and developers can integrate MANAS’s services into the workflow of their own Apps. For instance, a fintech App can integrate MANAS’s face recognition for payment verification. With API support, MANAS will be in a better position to commercialize its services and put them to full use in different scenarios. Industries will be able to access AI services on a massive scale to improve their efficiency, and financial services will also be able to make this part of what they offer. In the long run, every user will be able to find the AI service suited to their individual needs in the ecosystem.
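The step where the AI server distributes the task to an idle miner can be pictured as a simple queue-based scheduler. This `Dispatcher` class is hypothetical; the text does not describe how MANAS actually selects miners. Tasks wait in a queue until a miner is idle, and a miner rejoins the idle pool when its task finishes.

```python
from collections import deque
from typing import Deque, Dict, List, Tuple


class Dispatcher:
    """Toy scheduler: hand each task to an idle miner, first come, first served.

    Hypothetical: the real MANAS scheduler is not described in the text.
    """

    def __init__(self, miners: List[str]) -> None:
        self.idle: Deque[str] = deque(miners)
        self.pending: Deque[Tuple[str, bytes]] = deque()
        self.assigned: Dict[str, str] = {}  # task_id -> miner

    def submit(self, task_id: str, payload: bytes) -> None:
        """Queue a task and assign it right away if a miner is idle."""
        self.pending.append((task_id, payload))
        self._dispatch()

    def finish(self, task_id: str) -> None:
        """Mark a task done and return its miner to the idle pool."""
        miner = self.assigned.pop(task_id)
        self.idle.append(miner)
        self._dispatch()

    def _dispatch(self) -> None:
        # Pair waiting tasks with idle miners until one queue runs out
        while self.idle and self.pending:
            task_id, _payload = self.pending.popleft()
            self.assigned[task_id] = self.idle.popleft()
```

A first-come, first-served queue is the simplest possible policy; a real scheduler might also weigh a miner’s capacity or reliability.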